Surgical Video Processing and Assessment

The Media Queue is a complex, collaborative internal tool behind the most vital operations of the C-SATS division of Johnson & Johnson: processing surgical videos and sending them out for assessment of surgical skill. This case study covers my role in increasing C-SATS’ surgical case processing capacity through design.

My Tasks

Completely redesign the media queue's architecture and processes to be collaborative, efficient, and intuitive

Improve experience and efficiency for current media managers

Scale the media queue's capacity with the business (more videos to process AND more media managers to use the tool)

Build in the ability to handle more videos, support multiple people working collaboratively, and be easy for new hires to learn

Challenges and Restrictions

Massively complex enterprise system built on a data model that the entire business depended on remaining constant: C-SATS technical feedback to surgeons and case reports can only be validly compared when this data model is preserved

Wherever possible, I used legacy styling and components rather than improving visual polish beyond the baseline for usability, to keep scope down given the heavy back-end lift

⚠️Warning! The following process documentation includes images taken from surgical videos, including organs, blood, and medical instruments.

Original Goal

10%

reduction of human time spent processing each video

Actual Results

75%

reduction of human time spent processing each video

01

Company Background

What is C-SATS?

C-SATS, a Johnson & Johnson company, is a surgical education platform. Surgeons send in their surgical videos for assessment, which requires a robust internal system to process videos and output case reports.

Videos come in from the OR, pass through a series of steps that anonymize and assess the procedure footage, and are delivered to surgeons as case reports. This matters in a field where objective surgical feedback was previously notoriously difficult to get.

C-SATS is the first company to make objective scoring and expert qualitative feedback scalable by leveraging a HIPAA-compliant online platform and validated crowd-scoring.

02

The Project

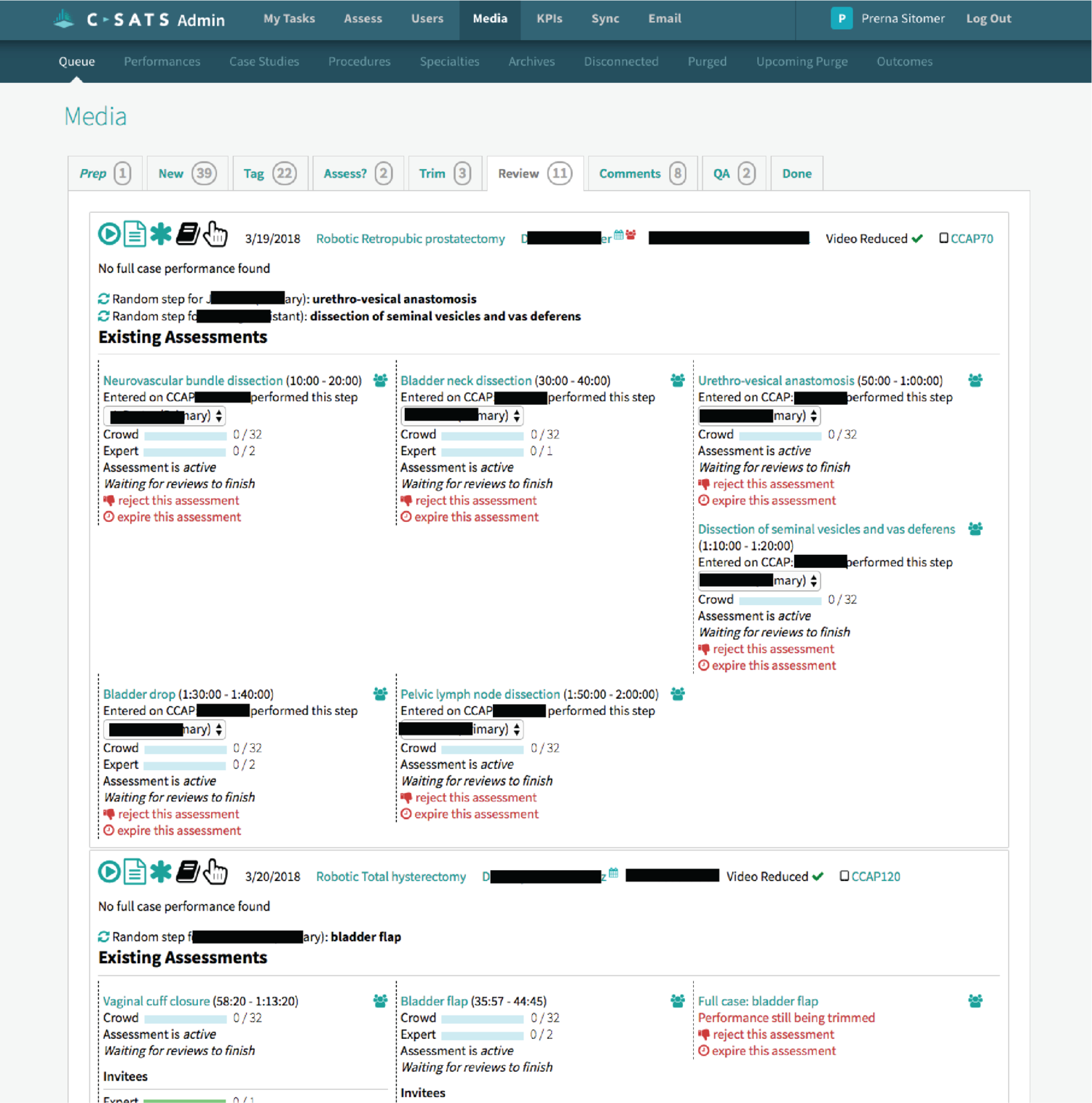

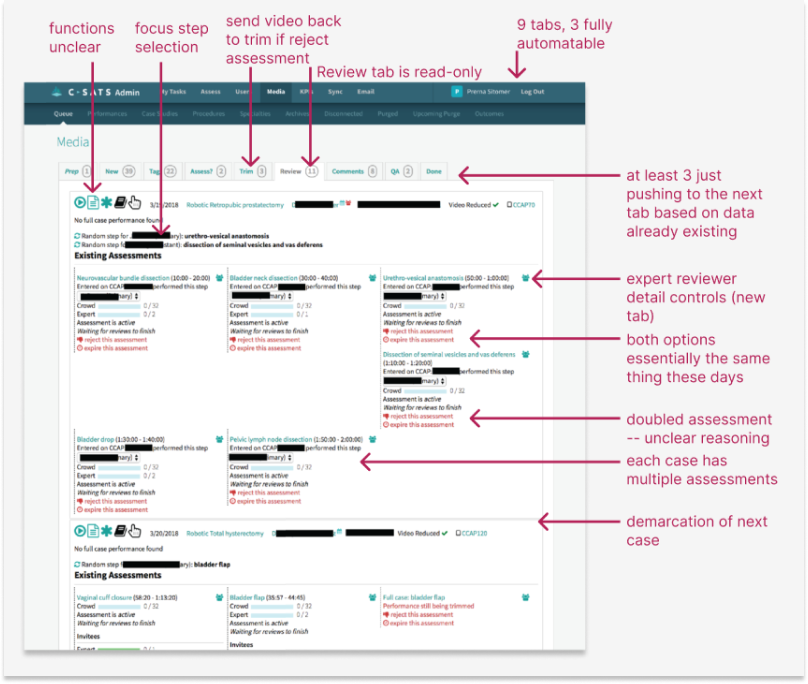

This is a screen from the original Media Queue, created and modified by the C-SATS developers for two years before I redesigned it completely.

What is the Media Queue?

The Media Queue is the full internal circulatory system of C-SATS. It is the system by which all C-SATS videos are processed and technical and qualitative assessments are generated.

The original media queue had been built and added to since C-SATS’ inception without any holistic design thinking or any designer on the job. It had:

A separate tab for every task per video

Little to no grouping of similar tasks according to the workflow

Little to no automation of simple, automatable tasks

No capability for multiple media managers to work collaboratively

No way to filter through incoming case videos

Unintuitive (and often hidden) placement and labeling of key tasks

Of the 9 tabs, 4 contained only a single, automatable step. 2 were obsolete. 3 required opening multiple new tabs to process videos. All were incredibly difficult to learn, because key complex tasks were hidden under generic emojis or links.

03

Initial Research Sessions

A sample of my notes from interviewing each manager, from which I generated insights about the function and usability of each step in the queue.

Observation

I observed each media manager for an hour at a time to determine the time and clicks spent per video, per stage in the media queue.

Interviews

I held extended interviews with each media manager on the pain points, frustrations, goals, and functions of each step of the queue, and referenced past interviews and conversations with surgeons and expert reviewers.

Media managers process cases and send them to surgeons and expert reviewers.

04

Findings

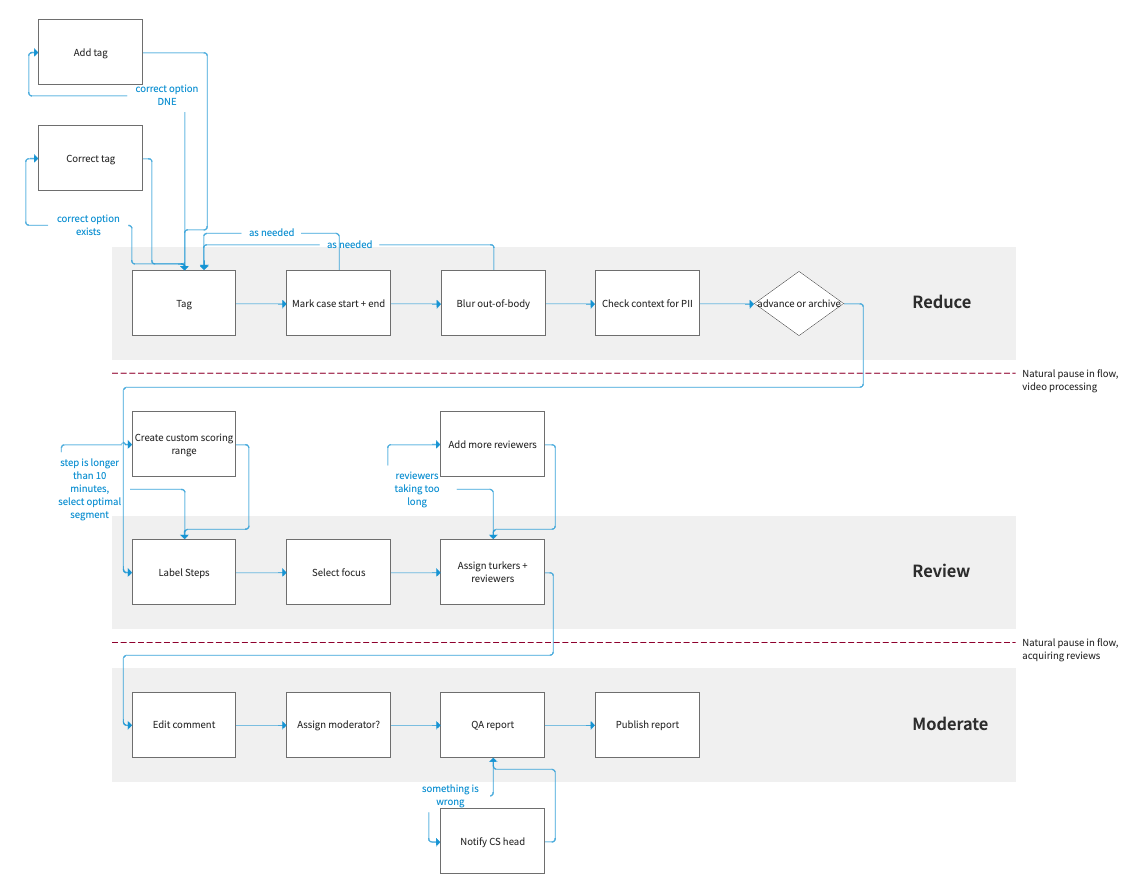

Finding 1

There is a big opportunity to automate several steps that simply involve pushing cases to the next appropriate state.

Finding 2

The old media queue is not a collaborative tool. Media managers often create accidental duplicates or corrupt files by attempting to work on the same case, and the queue gives no indication of who is doing what.

Finding 3

Sometimes cases from a certain hospital or discipline need to be prioritized that day, and in the old queue it’s very difficult to pick them out from the entire set.

Finding 4

There are natural breakpoints in the media processing flow, where cases need to be reviewed or videos are being reduced.

Finding 5

Many primary actions the media managers need to take are hidden, not visually prioritized, require workarounds to complete, or link to separate tabs in the old queue.

Finding 6

Some steps in media processing naturally go together, such as verifying case information and blurring any out-of-body footage.

Finding 7

There is an opportunity for performing surgeons to receive their case reports faster.

Finding 8

There is an opportunity to make cases available faster for expert reviewers, who would often complain about a lack of available cases.

05

Streamline the Flow

Diagramming the Flow

After observing the media managers working over several sessions, I arrived at this grouping of tasks and natural breaks in the workflow. Beyond the grouping itself, I also had to determine which tasks were actually necessary to the workflow, since more often than not they were buried or inaccessible.

My Grouping Concept

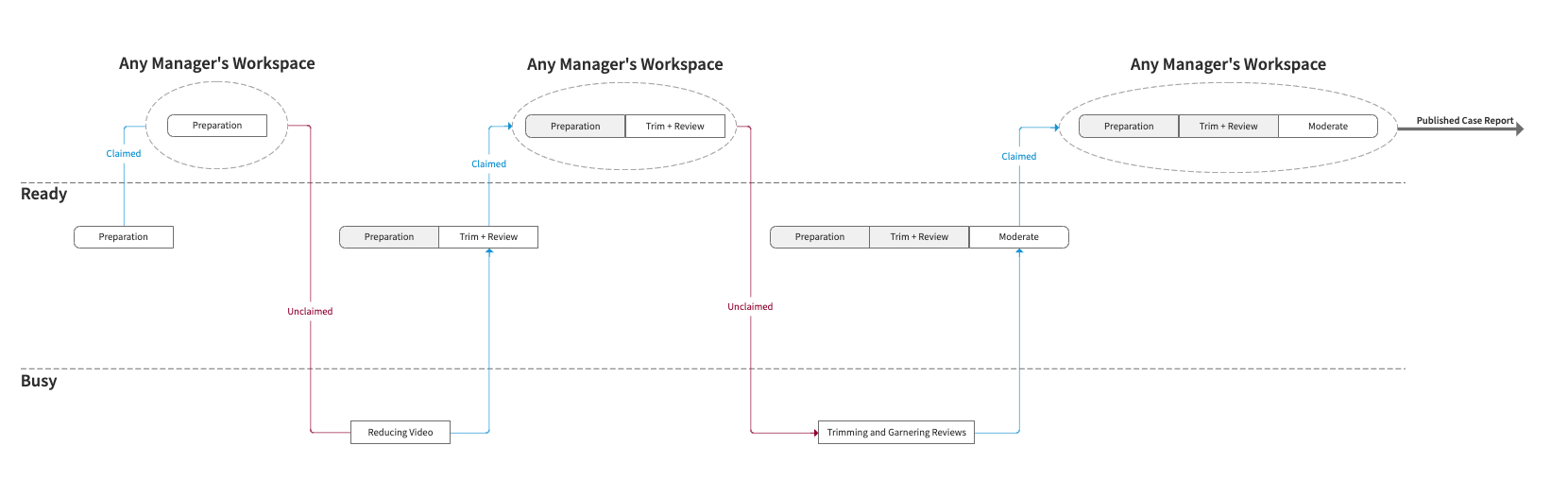

All human-executed tasks would be performed on a single case until a break for computer processing was needed, at which point the media manager would move to another case, allowing the system to automatically advance the case to the next stage once processing was complete.
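The grouping concept can be illustrated as a small state machine. This is a minimal sketch under stated assumptions, not the C-SATS implementation: the class and method names are hypothetical, and the three stage names are borrowed from the lanes described in the Handoff section.

```python
from enum import Enum


class Stage(Enum):
    # Stage names taken from the three lanes in the Handoff section.
    VALIDATE_AND_BLUR = 1
    TRIM_AND_REVIEW = 2
    MODERATE = 3
    DONE = 4


class QueueCase:
    """Hypothetical model of one case moving through the queue."""

    def __init__(self, case_id: str) -> None:
        self.case_id = case_id
        self.stage = Stage.VALIDATE_AND_BLUR
        self.processing = False  # True while the computer is working on the case

    def submit_human_work(self) -> None:
        # The manager finishes all human tasks for this stage in one sitting,
        # then is free to move on to another case.
        if self.stage is Stage.DONE:
            raise RuntimeError("case is already complete")
        if self.processing:
            raise RuntimeError("case is still processing")
        self.processing = True

    def processing_finished(self) -> None:
        # Triggered by the system, not a person: the case advances
        # to the next stage automatically.
        if not self.processing:
            raise RuntimeError("no processing in progress")
        self.processing = False
        self.stage = Stage(self.stage.value + 1)
```

The point of the sketch is that a person only ever calls `submit_human_work`; advancing to the next stage is the system's job.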

06

Making a Co-Working Tool

“Claiming” Cases

I designed a system in which each manager “claims” the videos they are working on, to prevent the crashes and duplicate assessments that kept happening in the old queue.

Passing Notes

Claiming requires the queue to show which manager is working on each case at any given time. I also added “notes for media managers” so that managers can pass cases to one another with context.
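To make the claiming and note-passing idea concrete, here is a minimal sketch. It assumes a simple in-memory model; `Case`, `claim`, `hand_off`, and `ClaimError` are hypothetical names for illustration, not part of the actual Media Queue.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Case:
    case_id: str
    claimed_by: Optional[str] = None                # manager currently holding the case
    notes: List[str] = field(default_factory=list)  # notes left for other managers


class ClaimError(Exception):
    """Raised when a manager acts on a case they do not hold."""


def claim(case: Case, manager: str) -> None:
    # Only one manager may hold a case at a time; re-claiming your own case is a no-op.
    if case.claimed_by not in (None, manager):
        raise ClaimError(f"{case.case_id} is already claimed by {case.claimed_by}")
    case.claimed_by = manager


def hand_off(case: Case, manager: str, note: str) -> None:
    # Release the claim and leave a note for whoever picks the case up next.
    if case.claimed_by != manager:
        raise ClaimError(f"{manager} does not hold {case.case_id}")
    case.notes.append(f"{manager}: {note}")
    case.claimed_by = None
```

A second manager who tries to claim a held case gets an error instead of silently creating a duplicate, which is the failure mode the old queue allowed.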

07

Ideation

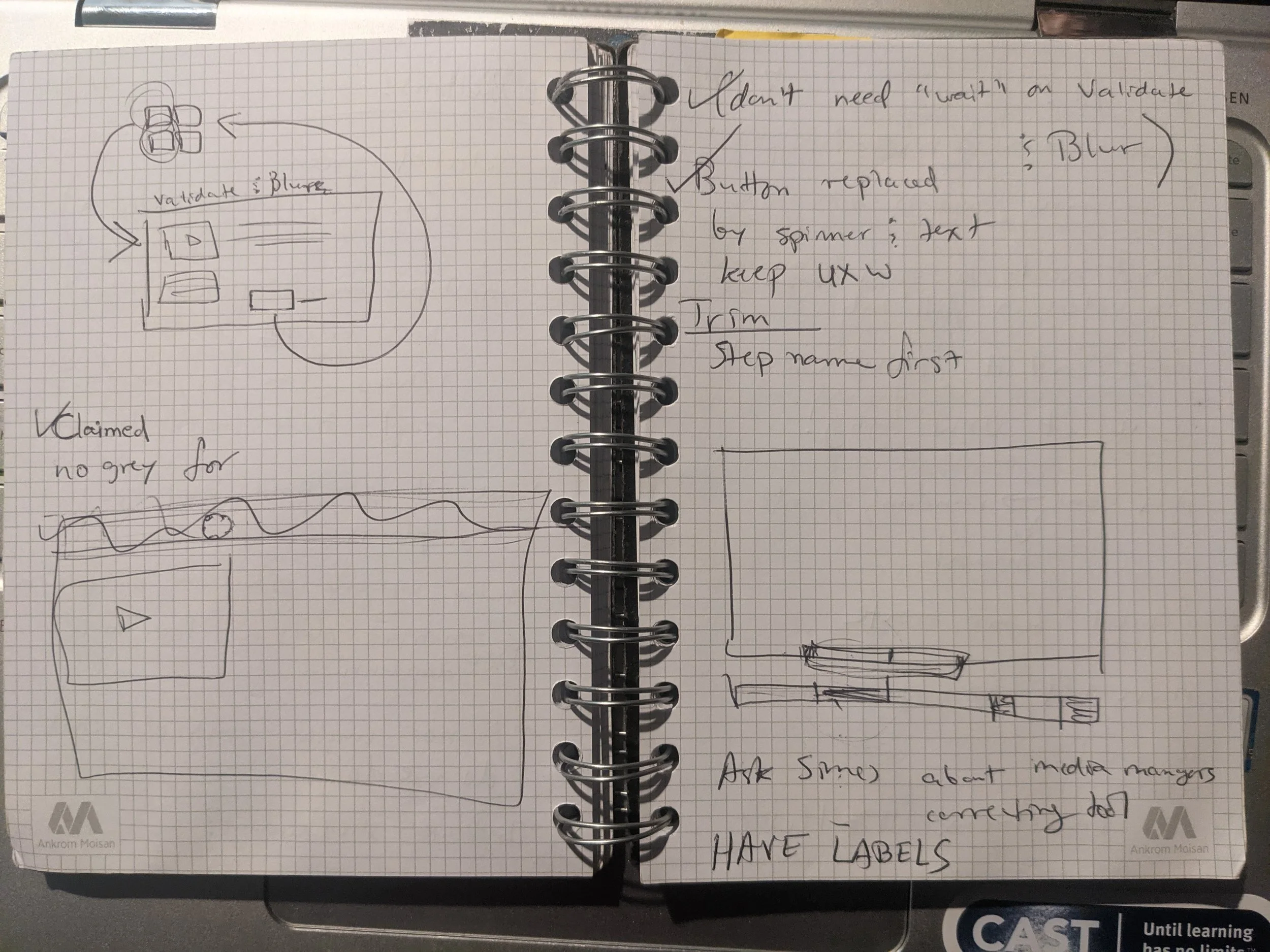

Sketches and Rough Wireframes

Questions I had while ideating:

What would each case look like when the managers weren't working on anything?

How would they know what step they were on?

What if there was something wrong with or notable about a case?

How can cases be passed off between media managers?

Is it important to show how long a case has been in the queue while it is processing?

08

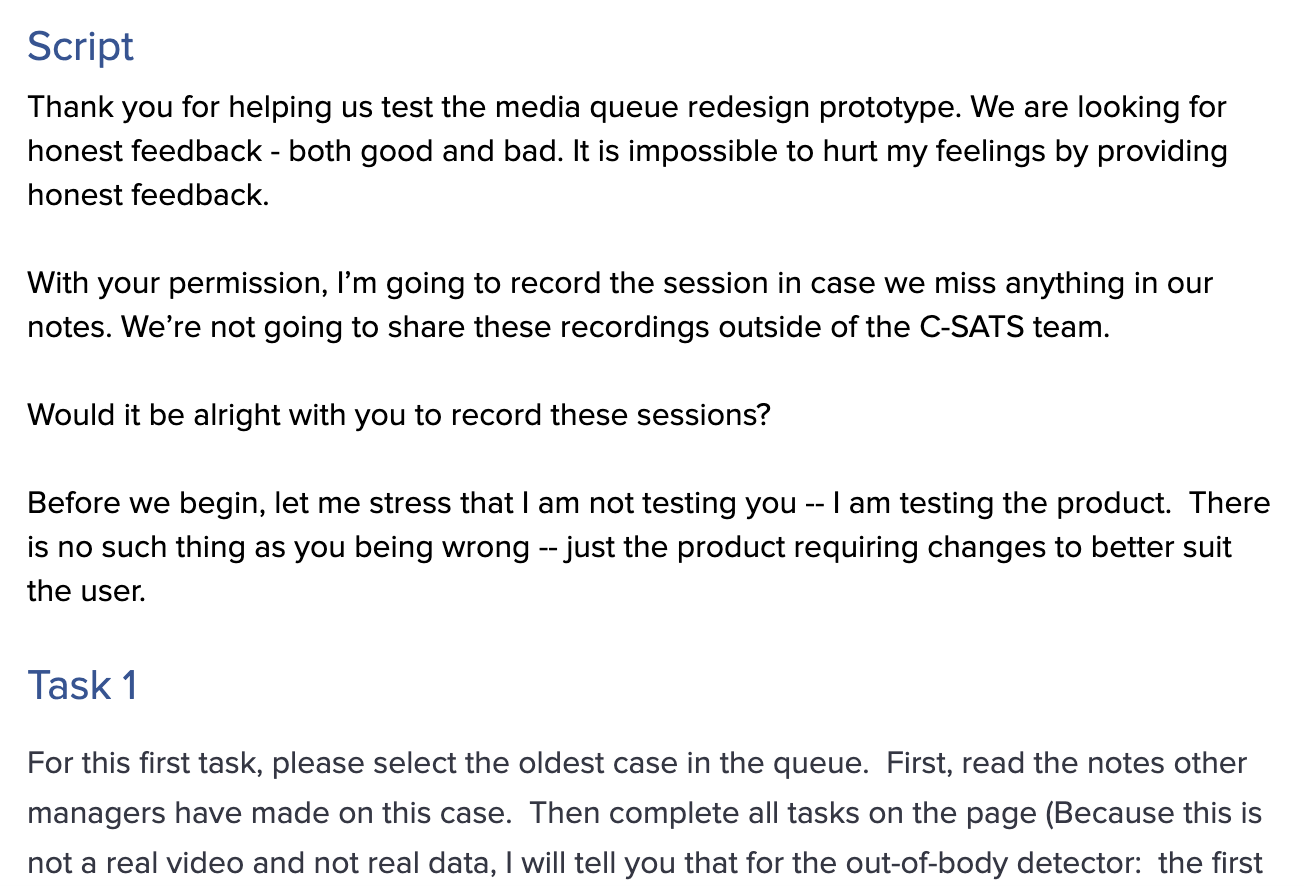

Usability Testing

This is an excerpt from the script from the test.

Range of Experience

I conducted usability tests with all media managers and with multiple employees from outside the media management team, to assess usability for both extremely experienced users and brand-new users of the media queue. This was especially important given the likelihood of growing the media team.

User performance and feedback at this stage were overwhelmingly favorable, with a couple of adjustments to affordance placements as a result.

09

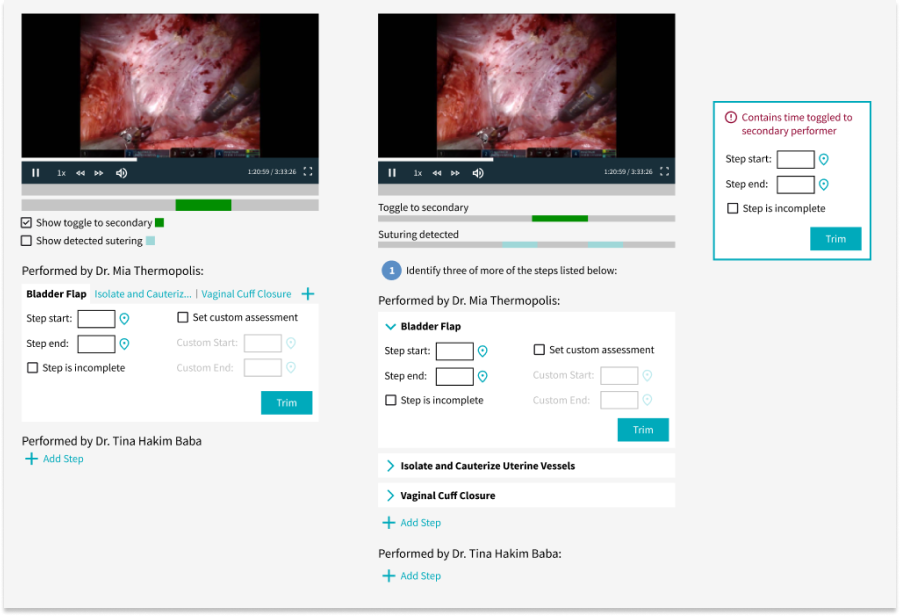

Iteration and States

Step Trimming

Here are a couple of different WIP iterations of the same step in the process. In this step, the media managers use the techniques detected by our ML classifiers to identify and trim steps in the video, then send them out to receive scores and feedback. These iterations contain adjustments based on the usability testing feedback, such as the ability to set custom assessments.

Fidelity

At this point, I also began raising the fidelity of my wireframes, cleaning up and polishing the existing styles as much as usability called for while keeping the scope of engineering work down.

10

Handoff

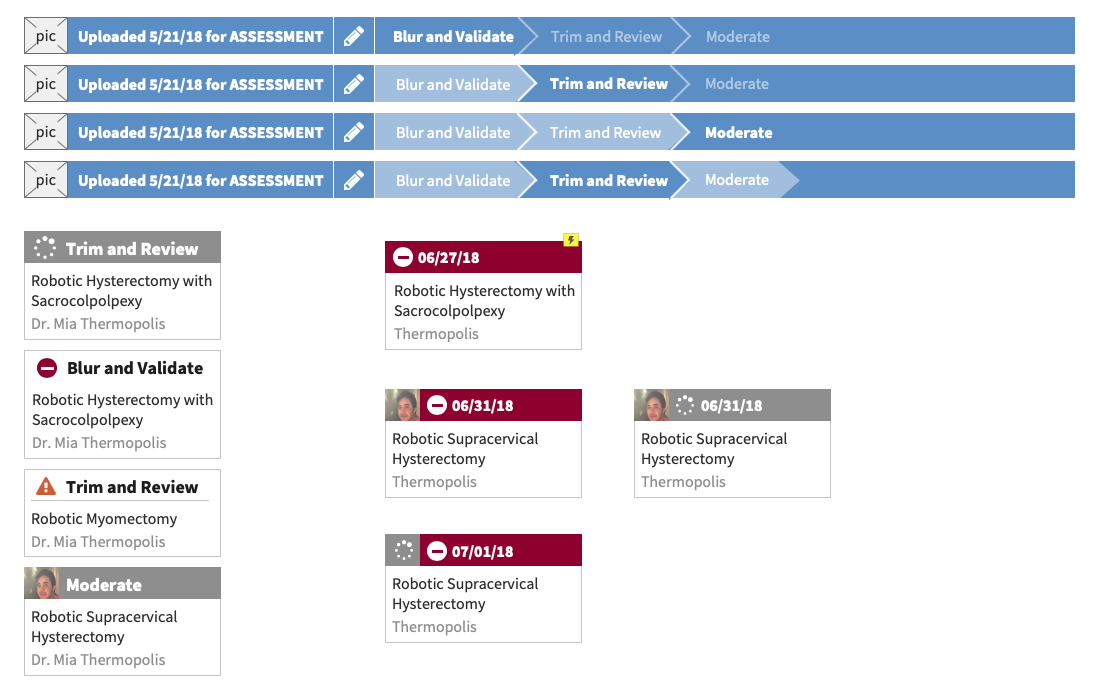

Kanban View

In this kanban view of all cases in the final Media Queue, I incorporated the following features:

Claimed, unclaimed, and processing case statuses indicated

Who is working on what case

Ability to filter cases (by hospital system, performers, specialty, modality, procedure, etc.)

Case warnings

Case notes

The consolidated 3 “lanes” of task groups:

Validate and Blur: preparing the case video and information for review

Trim and Review: identifying and trimming steps and sending them out for review

Moderate: last checks on case reviews before generating a report
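The filtering feature in the list above could work roughly as follows. This is a sketch only: `CaseCard`, `filter_cards`, and the field names are hypothetical, and the real queue also filters on performers, modality, and procedure.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class CaseCard:
    """Hypothetical card shown in the kanban view."""
    case_id: str
    hospital_system: str
    specialty: str
    lane: str                         # e.g. "Validate and Blur"
    claimed_by: Optional[str] = None  # None means the case is unclaimed


def filter_cards(cards: Iterable[CaseCard], **criteria: str) -> List[CaseCard]:
    # Keep only the cards whose fields match every given criterion.
    return [
        card for card in cards
        if all(getattr(card, name) == value for name, value in criteria.items())
    ]


# Example data is made up for illustration.
cards = [
    CaseCard("c1", "Hospital A", "Urology", "Validate and Blur"),
    CaseCard("c2", "Hospital B", "Urology", "Moderate", claimed_by="ana"),
    CaseCard("c3", "Hospital A", "Gynecology", "Trim and Review"),
]
priority = filter_cards(cards, hospital_system="Hospital A", specialty="Urology")
```

Combining criteria this way is what lets a manager pull out the cases that need to be prioritized on a given day, which the old queue made very difficult.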

Detailed (Step) Views

I incorporated the following features in the detailed view, based on my research findings:

Notes for media managers to mark important info about cases

Ability to archive a case from any stage

Automatic advancement past each stage in the queue once processing is complete

Ability to open less-common detailed processing tasks in another tab

Minimized scrolling

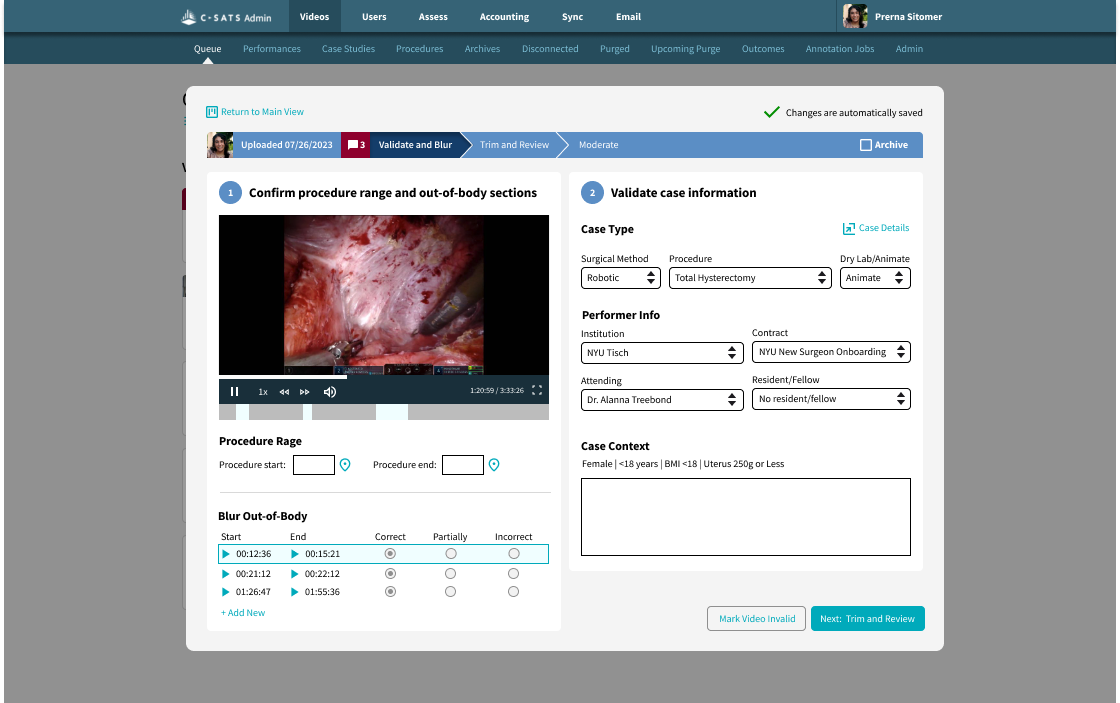

Step 1: Validate and Blur

In the first stage, Validate and Blur, I streamlined the following features for better usability and less friction:

Trimming the start and end of procedures

Validating, invalidating, and correcting detected out-of-body footage to be blurred

Validating and correcting case info

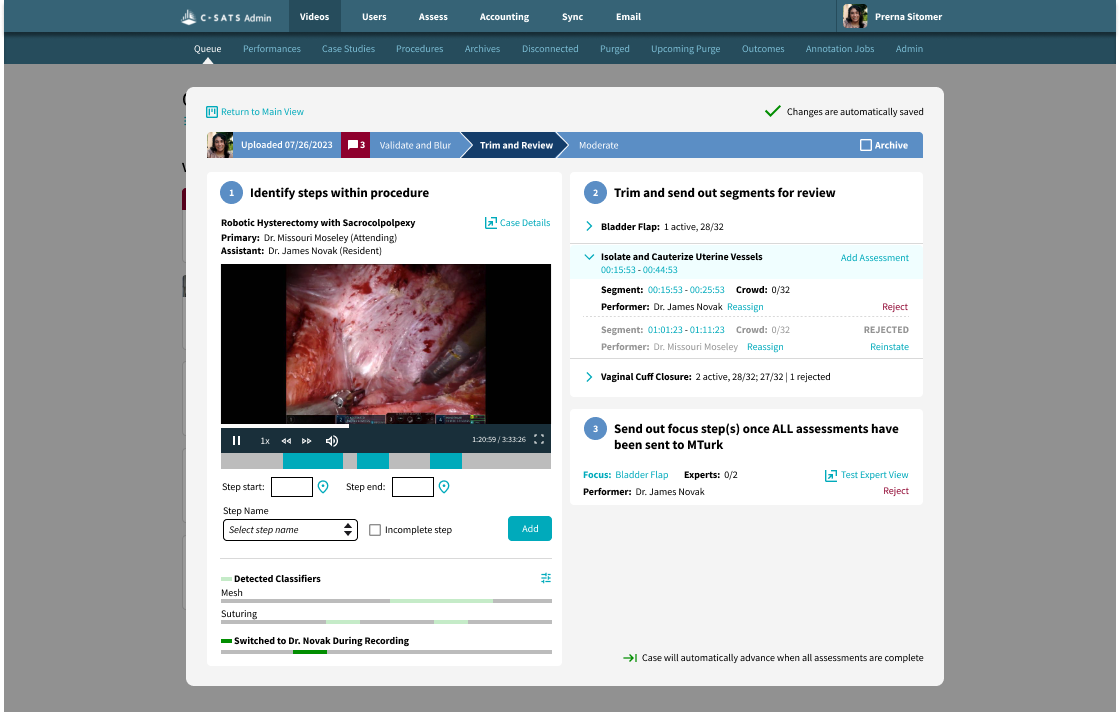

Step 2: Trim and Review

I redesigned and streamlined the following features for better usability and reduced friction in the Trim and Review stage:

Identifying steps within the procedure, including incomplete steps

Ability to view ML-detected classifiers and the secondary performer while trimming steps

Creating custom segments to assess for all marked steps

Ability to reject and reinstate assessments

Easily viewing the progress of crowd assessments

Easily and quickly creating expert qualitative assessments out of identified assessments

Step 3: Moderate

I redesigned and streamlined the following features for better usability and reduced friction in the Moderate stage:

Easily editing and rejecting or reinstating expert qualitative reviews

Ability to reopen a case for more qualitative feedback after rejecting feedback

Testing a completed case report

11

The Outcome

Exceeded Original Goal

As previously noted, this project exceeded the original goal: rather than reducing human time spent on the Media Queue by 10%, the redesign reduced it by 75%.

Other Outcomes

The newly freed-up time for the media managers meant massively increased capacity for C-SATS to process cases. This was critical as the business scaled up, signing contracts with more hospital systems and handling the influx of cases that followed.

The decreased turnaround time for case reports supports C-SATS’ consistency and perceived quality, and the more quickly surgeons receive feedback, the more quickly they can apply it in patient care.

12

User Testimonials

“This makes so much sense... I never would have thought to design it this way, but now that I see this, it feels so obvious.”

“This streamlines my work so much!”